The product design challenges of AR on smartphones

With the launch of ARKit, we are going to see augmented reality apps become available for about 500 million iPhones in the next 12 months, and at least triple that in the following 12 months — as we can now include the numbers of ARCore-supporting devices from Google.

This has attracted the broader developer community to AR, and we’ll see many, many, many experiments as developers figure out that AR is an entirely new medium. In fact, it could be even more profound than that, as through all of history we have consumed visual content through a rectangle (from stone tablets, to cinema, to smartphones, etc.) and AR is the first medium that is completely unbound.

It’s a new medium the way the web was different from print, different in kind, not in scale. In the same way that most of the first commercial websites were “brochure-ware,” where an existing print brochure was uploaded onto a website, the first AR apps will be mobile apps copied over to AR. They’ll be just as terrible as brochure-ware was, though they will be novel!

The initial products in a new medium are successful products from the prior medium copied and forced into the new one. That is easier for people to imagine, because it fits a mental model of the new medium that is still developing. I often hear that AR will be great “to see my Uber approaching” (even though a 2D map does a great job of this). The winning AR apps will take advantage of the new capabilities of the new medium and do things that weren’t even possible in the old medium.

When I worked at Openwave (which invented the mobile phone web browser) in the early 2000s, we used to talk about “mobile native” experiences, as distinct from “small screen websites.” In 2010, Fred Wilson described this concept far better than I could in his widely cited mobile-first post. Hopefully this post will be an echo of his, but for AR.

Apple also recently published some Human Interface Guidelines for ARKit, which look great. I couldn’t find anything similar from Google for ARCore, but will happily add a link here if someone can point me to one. This post goes into a fair bit more detail on the same types of topics based on our real-world learnings.

So what makes an app “AR native”?

I’m going to dig into a bunch of factors that are “special” to smartphone AR, the kind you’d build with ARKit or ARCore. I am specifically avoiding AR apps for head-mounted displays (HMDs), as HMDs open a whole new range of possibilities for products that aren’t possible on a smartphone (hands-free apps, longer session times and much deeper immersion potential, for starters).

So here’s a list in no particular order of things to think about as you consider concepts for your new ARKit app. I’m sure there’s more to be discovered; my friend Helen Papagiannis’ book Augmented Human goes even broader and deeper than I do here, but this list should be pretty comprehensive for now, considering it’s the result of more than 50 years of combined AR smartphone app development experience.

When we are pitched at Super Ventures on an AR app (which is pretty much constantly), it’s easy to tell if the developer hasn’t built an AR-native product, as the simple question “Why do this in AR, wouldn’t a regular app be better for the user?” is often enough to cause a rethink of the entire premise.

Think outside the phone

One of the biggest mental leaps to make is that as a designer/developer, you now need to start thinking outside the phone. You also need to rethink the basics of how a user exercises intent with smart devices in general. The structure of the real world, the types of motion that the phone might make, the other people nearby, the types of objects or sounds nearby, all now play a part in AR product design. Everything happens in 3D (even if your content is only 2D).

It’s a big conceptual leap to design content that “lives” outside the phone in the real world, even though the user can only see it and interact with it via the smartphone glass.

Various interactions/transitions/animations/updates that happen within the app are only one context to consider, as there could be a lot going on “outside the app” to take into account. Once you get your head around this, the rest becomes easier to grasp.

Biomechanics of wrist versus head (versus torso)

The fact that we hold our phones in our hands is a huge design constraint and opportunity. Our wrists and arms can move in ways that our heads just can’t. An app that you can use with one hand allows movements that an app that needs two hands can’t (with two hands you have to rotate your torso, and you have very limited translation without walking).

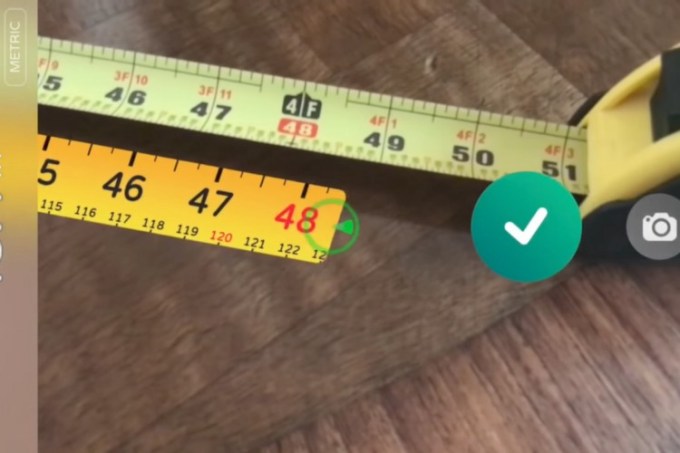

You aren’t going to slide your head along the table to get a measurement! This type of use case should always make sense for smartphone AR.

This means AR apps that don’t require you to be always looking through the display (as your wrist might be pointing away from your eyes) can work well. Two great examples are the “Tape Measure” app and AR apps that let you 3D-scan a small object.

A real-time fast-twitch shooter game could be difficult to make work with mobile AR: if you are moving the phone around quickly (and if you aren’t, why even do it in AR?), it might be hard to see what’s going on.

Why get your phone out of your pocket?

AR developers often forget that we usually have our phones in our pocket/bag when we are moving about. We tend to imagine the “user experience” of our app beginning when the user taps our app icon (or even once they are in AR view, looking through the camera). It should start much earlier, as one of the big unique benefits of AR is that it can provide real-time context around our location. If the user starts your app 30 seconds too late, there may be no benefit, as they’ve walked past the location the app needs. Facebook or SMS notifications don’t have this problem of being place-sensitive.

How would I even think to get my phone out right now? This is a huge design problem for smartphone AR (and beacons before this). (Image: Estimote)

So there’s a very real and difficult problem in getting a user to get their phone out while they are in the best place to use your app. Notifications could come via traditional push messages, or the user might think to use the app by seeing something in the real world that they want more information on, and they already know your app can help with this (e.g. Google Word Lens to translate languages on menus).

Otherwise, your app just needs to work anywhere, either through using unstructured content, or being able to tap into content that is very, very common. This problem is the No. 1 challenge for all the “AR graffiti” type apps that let people drop notes for others to find. It’s almost impossible for users to be aware that there’s content to find. FYI — this is just another version of the same problem that all the “beacon” hardware companies have, getting the shopper to pull out their phone to discover beneficial content.
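
To make the “30 seconds too late” point concrete, here is a minimal sketch of a place-sensitive trigger. It assumes you already have the user’s latitude/longitude and a point of interest; the 1.4 m/s walking speed, the 30-second lead time, and the function names are illustrative assumptions, not values from any platform API.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two lat/lon points (degrees)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def should_notify(user, poi, walking_speed_mps=1.4, lead_time_s=30.0):
    """True when the user is close enough that a prompt sent now still arrives in time.

    user and poi are (lat, lon) tuples. The trigger radius is the distance a
    pedestrian covers in lead_time_s (hypothetical defaults: 1.4 m/s * 30 s = 42 m).
    """
    trigger_radius_m = walking_speed_mps * lead_time_s
    return haversine_m(user[0], user[1], poi[0], poi[1]) <= trigger_radius_m
```

The radius is deliberately tied to how long it takes someone to notice the prompt, pull the phone out and open the app. In practice you would also have to account for location accuracy, which this sketch ignores.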

This challenge also manifests in a different way when AR app developers think about “competition.” As AR is so wide open, it’s easy to think that the opportunity is there to win. However, AR is always competing with the minutes in our day. We already spend time doing something, so how is my AR app going to compete against that?

AR as a feature, e.g. Yelp with Monocle, Google Lens image search

When we start thinking about ARKit apps, we often immediately think of the entire app experience taking place in “AR mode” while looking at the real world. This just doesn’t make sense, as many actions will work better in a regular smartphone app UI, e.g. entering a password. Indeed, 2D maps are often a better way to show location data, because the user can zoom in/out and gets a 360-degree view. Think hard about which parts of the app work natively for AR, and consider building a hybrid app where AR mode is a feature. Yelp did this with their Monocle feature, as an example.

Going into “AR mode” is a feature of the Yelp app (Monocle), not the purpose of the app. There’s wisdom here.

Interact with the world

At the simplest level, what makes a native AR app is that it enables a degree of interaction between digital content and the physical world (or a physical person). If there’s no digital/physical interaction, then it should be a regular app. Putting this even more strongly: as smartphone apps are our “default” UX, your AR app should only exist if it does something that can only be done in AR. Interaction with the world can come in three broad ways:

3D geometry: This means the digital content can be occluded by and physically react to the real-world structure. Many of the Tango demos, our Dekko r/c car jumping off physical ramps, and the Magic Leap little robot under the desk are all good examples.

The structure of the physical space becomes a key element of the app.

Other people sharing your view and physical space: If your app needs other people physically near you in order to give benefit, then it’s probably AR-native. Good examples of this are an architect showing a client a 3D model on the coffee table, or Star Wars Holochess.

Being able to “share a hallucination” with a friend or colleague in the same physical space is a great native use that AR enables.

Controller motion: This means the content can be static but you physically move your phone around, or the phone could act as a “controller,” like a Wii. This one is borderline IMO. If the content just sits on a tabletop and you can view the content from different points of view by moving your phone around, then personally I don’t see this as AR-native, as the only benefit is novelty value. A smartphone app will probably get better use using pinch/zoom, etc.

Some people argue that the sense of physical scale the user can get by moving around makes it AR-native… The question you need to ask is this: is there any benefit to this interaction beyond novelty value? One way in which the controller (phone) can be used natively is like a Wii controller, where specific movements of the controller cause action in the content. One example would be a virtual balloon hovering over a desk, and you could “push” it around by physically pushing the controller against the balloon.

Think about whether your use case needs these types of interactions to give the user the benefit you want. If not, then accept this is a novelty (not a bad thing in itself, just set expectations of re-use appropriately around zero) or build a regular app.

Location re-enables scarcity

Back in 2011 at the AWE event organized by my Super Ventures partners (Ori & Tom), Jaron Lanier gave a talk where he raised one of the most fascinating ideas that I’d heard in years. Just as the internet “broke” the concept of scarcity (i.e. infinite copies easily made of digital goods, doesn’t matter where I physically work from, etc.), AR has the ability to re-enable scarcity. Many, many AR experiences will be tied to the location in which you experience them. Even if an experience can be re-played in a different location, it should always be best in the place for which it was designed. It’s the digital equivalent of a live concert versus an MP3.

This has huge potential for new business models for digital businesses, especially when you start mixing in the potential of blockchain and decentralized businesses. Creative businesses, in particular, could benefit from this as they have been most disrupted digitally by the lack of scarcity, and at least in the music industry, it’s the live events that are generating the most income.

Digital content & experiences can become “scarce” or exclusive again, unable to be infinitely copied and possibly more valuable as a result, as they can only be experienced in a physical location (and maybe at a single time). This is similar to the way live concerts are more valuable than recordings. (Image: Gábor Balogh)

Pokémon GO is starting to take advantage of this concept with the “Raids” feature. For example, a Pokémon GO raid was set up in Japan that was the only location and time in the world where you could have acquired the most valuable Pokémon, Mewtwo.

Another example could be an “AR MMO,” where you have to travel to Africa to visit a specific smith. I expect some very interesting companies will be built around the recreation of digital scarcity.

Don’t get seduced by your (genuinely good) idea

AR is seductive. The more you think about it, the bigger you realize it is going to be, and the more great ideas you have that are completely new. We regularly see startups pitch us with a great idea: They have figured out a problem that needs solving, it can truly become a huge platform — the next Google etc. The problem is that the tech or UX probably doesn’t support it quite yet (or even the next couple of years). The entrepreneur believes in the idea and wants to get started; sadly, the market or tech doesn’t arrive before they run out of their 18 months of runway.

It can be very hard to put on the shelf a good idea you believe in. But you need to, unless you know for a fact (not just really, really believe) that your idea can (a) be built as you imagine it today; and (b) you know someone real who will buy it (i.e. you can give me their name and phone number).

If you can’t do the two things above, you should ask yourself whether your great idea is blinding you to today’s reality.

As a footnote here… a “good” idea doesn’t just mean a cool concept. It means essential. It means an experience that provides a compelling reason to displace a more efficient, socially understood and natural version of that feature in non-AR space.

Field of view is a big constraint

One obvious difference between a smartphone AR app and an HMD AR app is the field of view. HMDs with a large field of view (when they arrive) will feel very immersive; however, smartphone AR will always have two challenges:

- You only have a small “window” into the universe of your AR content (a “magic mirror”). Your friends have an even smaller window, should they want to also see what you do. This can be mitigated somewhat with good tracking.

- The screen on the phone displays what the camera sees, not what you see. The camera orientation, offset to the display, and field of view are different to our eye. If you drew a line from the center of your eyeball onto the world, then held the camera exactly on that line pointing the same way, and the camera field of view was close to what your eye would be, then the camera can appear kind of see-through, like a piece of glass. In reality, it never does, but people don’t mind, and they just look through the screen and ignore what’s not on the screen. (As a side note: There has been some research that does a pretty good job of adjusting the video feed to do a much better job of looking “clear,” but that probably won’t end up in mass-market phones. It’s worth a look, and maybe some experiments, for the curious.)

So what can be done to help with immersion when you’re looking through a tiny window at content that isn’t where your eye would naturally expect to see it?

First accept that users will generally suspend disbelief and accept the AR experience as being digital content in the world.

Think about scale. If your content is physically small, it can fit entirely inside the smartphone glass and camera field of view and avoid clipping at the edge of the screen. For example, a life-sized digital cat will probably give a better experience than a life-size T-Rex. We can apply some learnings from VR here in that playing with scale inversions can create a sense of immersion and feel magical, e.g. a tiny elf that lives behind a potted plant.
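
The cat-versus-T-Rex intuition can be checked with a little trigonometry. This is a rough sketch under stated assumptions: the 60-degree vertical field of view and the object heights are illustrative numbers, not the specs of any particular phone or asset.

```python
import math


def visible_extent_m(distance_m: float, fov_deg: float) -> float:
    """Height of the real-world slice the camera covers at a given distance."""
    return 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)


def fits_on_screen(object_height_m: float, distance_m: float,
                   vertical_fov_deg: float, margin: float = 0.9) -> bool:
    """True if the object fits inside `margin` of the visible height (no edge clipping)."""
    return object_height_m <= margin * visible_extent_m(distance_m, vertical_fov_deg)


def min_viewing_distance_m(object_height_m: float, vertical_fov_deg: float,
                           margin: float = 0.9) -> float:
    """How far back the user must stand before the whole object fits on screen."""
    return object_height_m / (margin * 2.0 * math.tan(math.radians(vertical_fov_deg) / 2.0))


# Assumed figures: a 0.3 m cat and a 4 m T-Rex, viewed from 1 m with a 60-degree FOV.
cat_fits = fits_on_screen(0.3, 1.0, 60.0)    # True
trex_fits = fits_on_screen(4.0, 1.0, 60.0)   # False
trex_distance = min_viewing_distance_m(4.0, 60.0)  # roughly 3.85 m
```

Under these assumptions the user would need to back up nearly four meters to see the whole T-Rex, which is more clear floor space than most living rooms offer.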

If your content is going to be larger than the field of view, then think about some affordances to help with the fact that users can’t see everything. One idea we found worked was to use some fog to cover the digital scene — only what you were looking at would be clear and the edges of the screen appeared foggy. This hinted to the user that there wasn’t anything interesting outside the screen area anyway, but they could pan around and disperse the fog if they wanted to explore a larger area. Anything that’s off-screen should really have some sort of indicator as to where it physically is. The user shouldn’t have to pan around wildly searching for some asset by guessing.
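
One way to build the off-screen indicator described above is to work in the camera’s own coordinate frame. The sketch below assumes a common graphics convention (x to the right, y up, the camera looking down the negative z axis) and hypothetical field-of-view values; how you obtain the asset’s camera-space position depends on your AR framework and is not shown.

```python
import math
from typing import Optional, Tuple


def offscreen_arrow_deg(point_cam: Tuple[float, float, float],
                        h_fov_deg: float = 60.0,
                        v_fov_deg: float = 45.0) -> Optional[float]:
    """Return None if the point is visible, else the screen angle for a hint arrow.

    point_cam is the asset position in camera space (assumed convention:
    +x right, +y up, camera looks along -z). The returned angle is in degrees,
    measured counterclockwise on screen: 0 = right, 90 = up, 180 = left.
    """
    x, y, z = point_cam
    depth = -z  # positive when the point is in front of the camera
    if depth > 0:
        h_angle = math.degrees(math.atan2(x, depth))
        v_angle = math.degrees(math.atan2(y, depth))
        if abs(h_angle) <= h_fov_deg / 2 and abs(v_angle) <= v_fov_deg / 2:
            return None  # inside the view frustum, no indicator needed
    # Off-screen (or behind the user): point the arrow toward the asset.
    return math.degrees(math.atan2(y, x)) % 360.0
```

An asset directly behind the user with no sideways offset has no preferred direction; this sketch falls back to pointing right (0 degrees) in that case, which is an arbitrary choice.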

This sense of immersion can be quite difficult to achieve in practice. Getting your product used beyond a one-time novelty experiment is hard and means re-thinking many UX aspects. User testing becomes so much more important as you are starting from the basics. In AR, you have to repeatedly test with the same users over time to get rid of the novelty bias and get real data. It’s a big jump from something that looks impressive on YouTube to something that actually feels good to use.

Accept that you have no control over the scene

One of the biggest differences between an AR app and any other kind of software is that as the developer, you have no control over where in the real world the user decides to start your app. Your tabletop game might need a 2×2-foot playing area, but the user opens the app on a bus, or pointing at a tiny table, or a table covered with plates and glasses. The app is effectively unplayable (or you just use it as if it’s not an AR app), and you end up with two viable options and a third that is still a ways off.

First, give the user specific instructions about where to use the app. Expect them to go to another room, or clear the table, or just wait until they get home. Expect them to have a poor experience the first time they use it in the wrong place. It doesn’t take much to imagine what this will do to your usage metrics. Another way to solve this is to make an app for a very specific use-case so users won’t even think of opening it somewhere it won’t work.

The other viable approach is to design the app with content that is “unstructured,” i.e. it can work anywhere. We eventually figured this out at Dekko, where we started off trying to build a game with structured levels and difficulty, but ended up with a toy car that could just drive around anywhere. If you are thinking of building a game for ARKit, my advice is to build digital toys that can be played with anywhere (virtual remote-control car, virtual skateboard, virtual ball and stick, etc).

Finally, the third and best solution, which isn’t viable because it’s not technically possible yet, is where things will end up. Have a 3D understanding of the scene, then automatically and intelligently place your content in the scene in context to what’s already there. This needs two hard problems solved: real-time 3D scene reconstruction with semantics (the reconstruction part is almost solved), and procedural content layout tools.

We’re still a few years away from having all of these solved for developers, so you’ll need to use one of the first two, viable, approaches above.
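
The two viable approaches can also be combined: check whether the scene offers enough room for the structured experience, and fall back to free play if it doesn’t. This is a minimal sketch that assumes your AR framework reports detected horizontal surfaces as (width, length) extents in meters; the mode names and the function are hypothetical.

```python
from typing import Iterable, Tuple

FOOT_M = 0.3048  # meters per foot


def choose_mode(plane_extents_m: Iterable[Tuple[float, float]],
                required_ft: float = 2.0) -> str:
    """Pick 'structured_game' if any detected surface fits the playing area.

    plane_extents_m holds (width, length) pairs in meters for each detected
    horizontal surface. The default 2x2-foot requirement mirrors the tabletop
    example above. With no suitable surface, fall back to 'free_play',
    i.e. unstructured content that works anywhere.
    """
    required_m = required_ft * FOOT_M
    for width, length in plane_extents_m:
        if min(width, length) >= required_m:
            return "structured_game"
    return "free_play"


# A tiny cafe table only: not enough room, so drop into free play.
mode_on_small_table = choose_mode([(0.3, 0.4)])
# A small table plus a larger desk: the desk is big enough.
mode_with_desk = choose_mode([(0.3, 0.4), (0.8, 0.7)])
```

The design choice here is to never block the user: instead of showing an error on the bus, the app quietly offers the version of itself that works there.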

Moatboat has designed their AR app to let the user place content anywhere, without requiring structure. This type of “free play” is a particularly good fit for AR as the developer doesn’t know what objects the physical scene may contain. The UX works just as well on a flat surface as a messy room, as a barn or some cows can now just be placed on top of the pile of laundry, and fun can be had making them fall off the “mountain.”

The good news is that having no control and giving maximum agency to the user is where the magic happens! It’s the defining aspect of the medium. Charlie did a great talk on this topic.

In addition to the above, you are given an arbitrary coordinate system for your app that is unique to this session. Your app only knows its relative position within this coordinate system; the app starts at XYZ 0,0,0. There’s no persistence, no correlation with “absolute coordinates” (latitude/longitude/altitude) and no ability to share your “space” with someone else. This will certainly change in future (12-18 months) updates to ARCore and ARKit, and there are a few startups working on overlay APIs, but for now, you need to take this into account in your product design.
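
To see why session-relative coordinates can’t simply be shared, consider a simplified 2D sketch (real sessions track full 3D position and orientation). The “world” frame and origin poses below are hypothetical: they stand in for information the app does not actually have, which is exactly the problem.

```python
import math
from typing import Tuple

Pose = Tuple[float, float, float]  # x, y, heading (radians) of a session origin


def world_to_session(point: Tuple[float, float], origin: Pose) -> Tuple[float, float]:
    """Express a world-frame point relative to a session origin."""
    ox, oy, heading = origin
    dx, dy = point[0] - ox, point[1] - oy
    c, s = math.cos(-heading), math.sin(-heading)
    return (c * dx - s * dy, s * dx + c * dy)


def session_to_world(point: Tuple[float, float], origin: Pose) -> Tuple[float, float]:
    """Inverse mapping; only possible if the origin pose is known."""
    ox, oy, heading = origin
    c, s = math.cos(heading), math.sin(heading)
    return (ox + c * point[0] - s * point[1], oy + s * point[0] + c * point[1])


# The same physical spot, seen by two sessions that started in different places.
virtual_object = (2.0, 1.0)
session_a: Pose = (0.0, 0.0, 0.0)
session_b: Pose = (2.0, 0.0, math.pi / 2)

in_a = world_to_session(virtual_object, session_a)  # approximately (2.0, 1.0)
in_b = world_to_session(virtual_object, session_b)  # approximately (1.0, 0.0)
```

Each session reports different numbers for the same spot, and sending the coordinates from A to B is meaningless unless both origin poses are known in some common frame. Persistence has the same issue: the next launch is effectively a new session with a new origin.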

Before our content can interact with the world, the AR devices need to be able to capture or download a digital model of the real world that accurately matches the real world you are seeing. (Source: Roland Smeenk)

How we naturally hold our phones

In the early days of mobile AR, we optimistically thought people would happily hold their phones up in their line of sight to get the experience of an AR app (we called it the Hands Up Display). We vastly overestimated how willing people would be to change behavior. We vastly overestimated how accepting other people would be of you doing this behavior (remember Glassholes?). Your app will not be the app that changes this social contract. You’ll need your app to work based on how people already hold their phones:

- Hands up for very short periods of time, about as long as it takes to take a photo. This means AR becomes a way to visually answer a question, rather than a way to pursue long-session engagement.

If you expect your users to hold a phone or tablet like this, then your app better only need them to do it for a second or two. (Image: GuidiGo)

- At a 45-degree angle pointing at the ground, like we already do most of the time. This points to apps with smaller-scale content (e.g. 1- or 2-foot-high assets) where the action takes place right in front of you.

This position is much more likely to result in a user using your app for a while. Note, though, that the real-world scene becomes much, much less interesting and more constrained.

There are a number of ways that the phone sensors can be used to switch modes depending on how the user holds the phone, e.g. your app is a regular 2D map when held horizontally, but switches to AR mode and triggers a visual search instantly when it’s held up. Nokia’s City Lens app managed this UI in a nice way back in 2012. These are good ideas to explore.
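
A mode switch like this can be sketched as a small state machine driven by the phone’s pitch. The sketch assumes you already derive a pitch angle from the motion sensors (0 degrees flat with the screen up, 90 degrees held upright); the thresholds and mode names are illustrative guesses, not values from Nokia’s app or any SDK.

```python
def next_mode(current_mode: str, pitch_deg: float,
              raise_threshold_deg: float = 60.0,
              lower_threshold_deg: float = 30.0) -> str:
    """Switch between 'map' and 'ar' based on how the phone is held.

    Two separate thresholds add hysteresis, so the UI doesn't flicker
    between modes when the phone hovers around a single cut-off angle.
    """
    if current_mode == "map" and pitch_deg >= raise_threshold_deg:
        return "ar"
    if current_mode == "ar" and pitch_deg <= lower_threshold_deg:
        return "map"
    return current_mode


# Simulated pitch readings: flat, tilting up, held up, relaxing, lowered again.
mode = "map"
history = []
for pitch in [10.0, 45.0, 65.0, 45.0, 25.0]:
    mode = next_mode(mode, pitch)
    history.append(mode)
# history == ["map", "map", "ar", "ar", "map"]
```

Note that the 45-degree reading gives a different answer depending on whether the user is raising or lowering the phone, which matches the natural resting position described in the list above.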

Input is disintermediated by the glass

As you test your app, you’ll realize that one of the first things users do when they see your virtual content appear in the real world is reach around the phone and try to touch it, then look confused when they can’t! This is a good sign that you’re building something cool, but it shows the challenge of input and interactions being mediated by a piece of glass that is physically in your hands, while the content may be several feet away.

You’re essentially asking someone to abstract their direct 3D understanding of the world back into 2D gestures on a smartphone screen. This adds cognitive load and also breaks immersion. But relying on big spatial gestures or on the presence of others can be equally problematic.

Working out what UI affordances make sense to users that let them select and interact with content that is “over there” can be challenging. Our Dekko r/c car solved this by having the phone be the r/c controller, which has a direct analog in real life, so it made sense to people. With our monkey, and at Samsung where we were interacting with Samsung SmartHome products, it was harder to come up with universal solutions that were immediately intuitive.

Using the design concept of an r/c car meant that users naturally expected the “controller” to be a separate hand-held object that controlled the car at a distance. It solved the problem of the user only being able to touch “the glass” but needing to influence the object some distance away on the table.

Note that just being able to physically “touch” the content doesn’t solve the problem either. If you’ve had a chance to try Meta HMD or Leap Motion, you’ll notice that the lack of haptic feedback just creates another dissociation for the user to work around. IMO, input for AR is one of the biggest problems to really solve before we can hope for mass-market regular daily use of AR.

Opportunity to manipulate video frames of the real world (versus see-through displays)

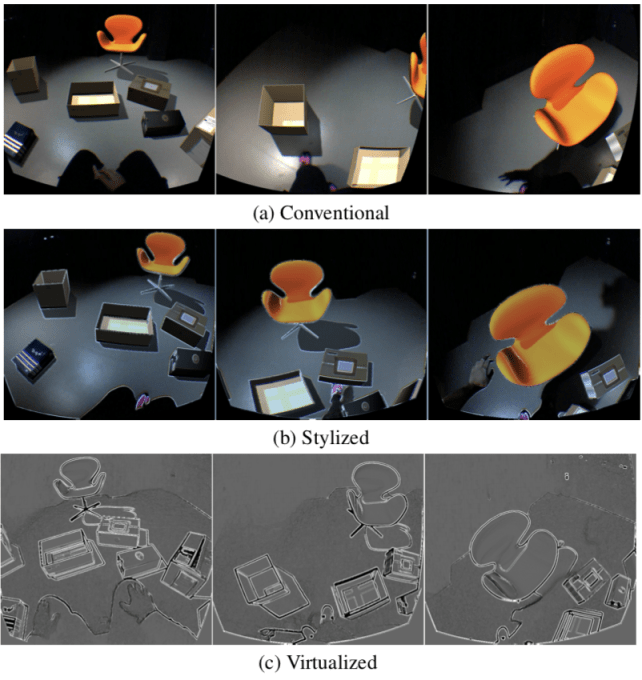

Manipulating the video feed can help make the virtual and real appear more coherent and improve the UX. What is real and virtual in these scenes? Notice how it’s harder to tell as more stylization is applied.

One characteristic of smartphone AR as opposed to see-through HMDs is that the “real world” the user sees is actually a video feed of the real world. This gives opportunities to manipulate the video feed to improve aspects of your app. Will Steptoe did some great research back in 2013 before he joined Facebook, where he showed that by subtly stylizing the video feed of the real world, it became harder to tell virtual and real content apart, thus increasing immersion. This is a really interesting idea, and could be applied in innovative ways to ARKit apps.

“App” concept for context switching versus native AR UI

Smartphone AR apps, by nature of the OS desktop being what the phone boots into, rely on the smartphone app metaphor to open/close “apps” to switch contexts (e.g. switch between my messaging app versus a game. I probably don’t want both of those in one app and the app trying to figure out what I really mean to do). You will dip into and back out of AR view (looking through the camera) constantly, switching contexts each time, driven by the user.

This really simplifies things as you don’t have to worry too much about dynamic context switching (e.g. when I point my phone at a building, do I want AR to display the address, the Yelp review, Trulia apartment prices, the architectural history, which of my friends are inside?). It’s a seductive idea to try to be the umbrella app with lots of different use cases supported (“I’m a platform!”), but understanding what the user wants and showing that is an incredibly challenging problem to solve. (Probably too hard for anyone who is learning anything from this article.)

Skeuomorphism is good

AR is a brand new medium, and users simply don’t know how to use it. There are lots of really clever ways to design interactions, but users won’t be able to grasp your cleverness. If you replicate the “real” thing you are digitizing, users will instinctively understand how to use your app.

At Dekko we built this amazing little monkey character that people loved, but they had no idea what to do with a little monkey on the table. When we switched to an r/c car that looked like an r/c car, we didn’t have to explain anything. At Samsung we prototyped receiving a phone call in AR. There are so many cool ways to do this (e.g. a Hogwarts owl could fly over and land in front of you with a message), but the idea that user-tested the best was pretty much copying the look and feel of your iPhone phone app, with green circles to answer, red to ignore, etc.

It’s important to note that it won’t be 2D skeuomorphism. It’s likely a new 3D symbolic language will evolve that uses spatial cues (shadows, shading, transparency, etc.) but without necessarily needing to be photo-realistically faithful to the real-world objects.

Making your digital objects literally look exactly the same as physical objects that users already know how to use is a good idea when the entire experience is alien to a user.

The proof of this comes only by user-testing your app with users who have never used AR before. I guarantee that you will be shocked at how much you need to simplify and make things literal in order to avoid explaining what to do.

Affordances for users who won’t believe what they see

Something we observed that was hard for us to accept, being immersed in AR all the time, was that users often did not believe what they saw. They couldn’t believe that the content was actually “in the 3D world” versus on the screen.

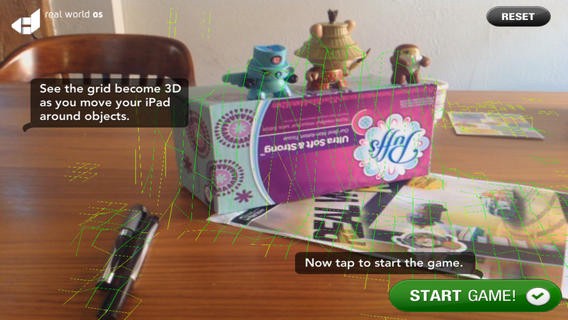

Making liberal use of affordances and subtle signals to show some connection to the real world will really help users understand what is going on. I can’t overstate the importance of at least a drop-shadow, if not dynamic shadows and light sources. Putting grid lines or other means of “painting” the world separately from your interactive content was also very effective at helping users understand.

We had to include grid lines and colorize the edges of the grid to highlight that the app understood depth and 3D, and hide other parts, all to signal to the user what was going on “under the hood” with the technology in a subtle way. This helped them trust that the app would behave in ways that were hard for them to understand or believe.

Another affordance that is difficult but hugely effective is animation: the virtual object’s animated responses to collisions, occlusions, phone motion and control input are critical to having the object appear “real.” Stable tracking (without drift) is only the first part of this. Study virtual characters “walking” in AR and you’ll often pick up that their feet “slide” a little on each step, which feels wrong and breaks presence. The same applies if the character “bumps into” a physical structure and doesn’t respond as you would expect.

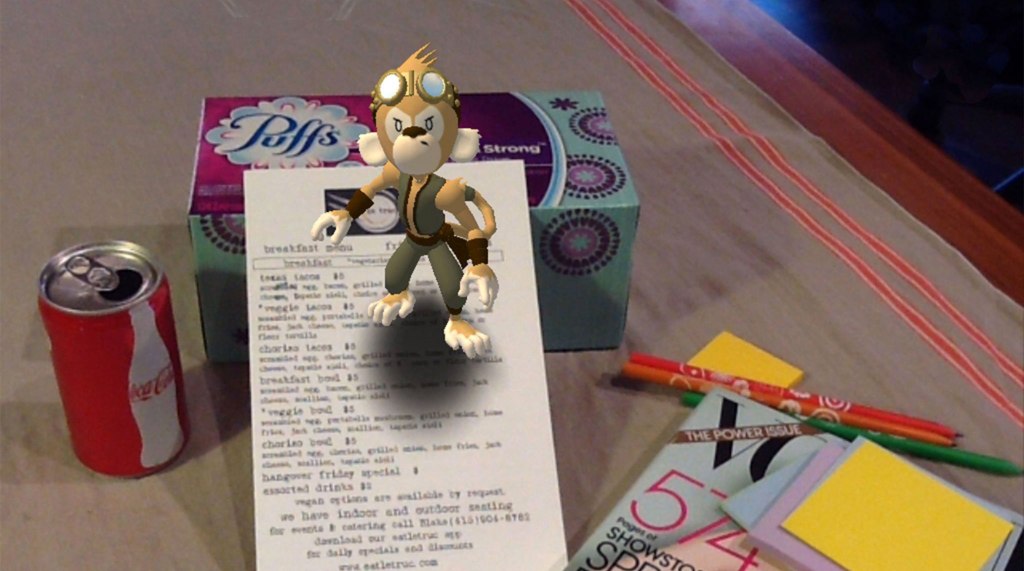

Cues like eye contact with the camera really feel good. A big part of getting this right is choosing the right type of character to put into your AR scene. The more realistic your character, the more subconscious expectations the user will have regarding what is “right.” The uncanny valley is significantly more of a problem for AR than for animated movies, because we have the context of the real world all around. We found that consciously selecting characters that are not “real” and more cartoony or fantastical ensured people’s expectations of their behavior in the real world were not pre-set, and flaws in the animation were less off-putting. Think Roger Rabbit, not Polar Express.

One big issue that affordances highlight is content overlap and information density. Most AR demos show a happily laid out space with labels all neatly separated and readable. As exhibition designers and architects know, designing labels and signs in 3D space to be readable and understandable while moving is really hard.

Character and asset design

Huck’s design of the Dekko monkey really connected with people and they loved it. Even today (3-4 years later) I still have random people ask me about it.

When we were thinking about which assets and characters to use, we discovered some pretty workable approaches that seem to be spreading across the industry. Silka did a lot of research and user testing of various ideas for our Dekko monkey back in 2010. She found that “Pixar for adults” was the perfect way to think about sophisticated, low-poly-count models that didn’t try to cross the uncanny valley, yet with some affordances really looked good in the real world. This style was accessible to both women and men, children and adults, and could work across cultures globally.

She felt that digital versions of KidRobot collectible vinyl toys were perfect, and she reached out to KidRobot’s top designer, Huck Gee, who designed our monkey and has since become a great friend (seriously, hire him to design your AR characters, he’s amazing — hit me up for his contacts).

A few years later Natasha Jen from Pentagram independently came to almost identical conclusions for a Magic Leap design project, including the KidRobot inspiration. This video discusses her thinking (around the 30-minute mark).

The latest demos for ARCore show a similar style, especially the Wizard of Oz characters.

So what?

I’m hoping that sharing these learnings will save a couple of product iteration cycles, and that products that users engage with will come to market sooner. I’ve been seeing YouTube demos of great concepts for almost 10 years, and all I want is to use some great apps. There’s a big difference and a larger chasm to cross than many people realize.

If I could end this whole post with one lesson to take away, it would be this: put an experienced AR, UX or industrial designer onto your team in a senior role and user test, user test, user test every little thing. Good luck!

Thank you to…

This post would not have been possible without the help of some friends who have a far better understanding of the AR UX than I ever will, and have been working on solving real AR product UX problems for at least five years each:

- Charlie Sutton led design on our AR team at Samsung and previously led a team at Nokia designing AR products (little known fact… Nokia was a world leader in AR in the late 2000s and still has one of the richest AR patent portfolios), and is now working at Facebook.

- Paul Reynolds is building Torch to make creating native AR/VR apps simple for developers and designers. Paul previously led the SDK team at Magic Leap, figuring out the intersection of apps and platform for AR.

- Mark Billinghurst is one of the world’s leading augmented reality researchers and is one of the few industry legends when it comes to AR interactions. Mark has been researching this domain for more than 20 years and his work is almost certainly cited in whichever books or papers you learned AR/VR from. Mark is currently working at the Empathic Computing Lab in Adelaide and previously worked at HIT Lab NZ; most of his work is published at these sites. He also is a good friend and one of my partners at Super Ventures.

- Jeffrey Lin, PhD is a world expert on how visual perception is represented in the brain. He took those learnings to design products at Valve and Riot Games before joining Magic Leap as Design Director. His Medium posts are a wealth of AR design knowledge.

- Silka Miesnieks, my wife, has worked designing AR products since 2009, and co-founded Dekko with me and figured out AR UX problems that many AR people still aren’t thinking about. She now leads The Design Lab at Adobe helping figure out the future of immersive design tools for people who aren’t engineers.

All the errors herein are mine and result from me not translating the advice I received into the post above. You should follow all of them on social media, ask them for help, take their advice and try to poach them away from their current jobs if you can!

Featured Image: Hero Images/Getty Images

Published at Sat, 02 Sep 2017 16:00:09 +0000